Happy 2018 everyone! I wanted to share something I have been testing at the request of a few customers who are interested in using Tintri Cloud Connector. I will preface this with a simple statement: this is currently unsupported, although I am working on that within the Tintri walls. What makes Tintri Cloud Connector unique is that it natively interacts with the S3 API. Therefore, as long as an S3 target is 100% S3 API compliant, I have found no reason for this not to work. In fact, I have tested it with multiple targets, as you will see.

When Tintri Cloud Connector was first released last year, it integrated with AWS S3 in the public cloud and with IBM Cloud Object Storage, which is essentially an on-premises S3 target. So I got thinking (as did a few customers): what about other S3-compliant targets, such as:

- Minio (used within products like FreeNAS, or standalone)

- Cloudian

- Dell EMC ECS

- Scality S3 Server

- Cohesity S3

- Ceph (tested by a co-worker)

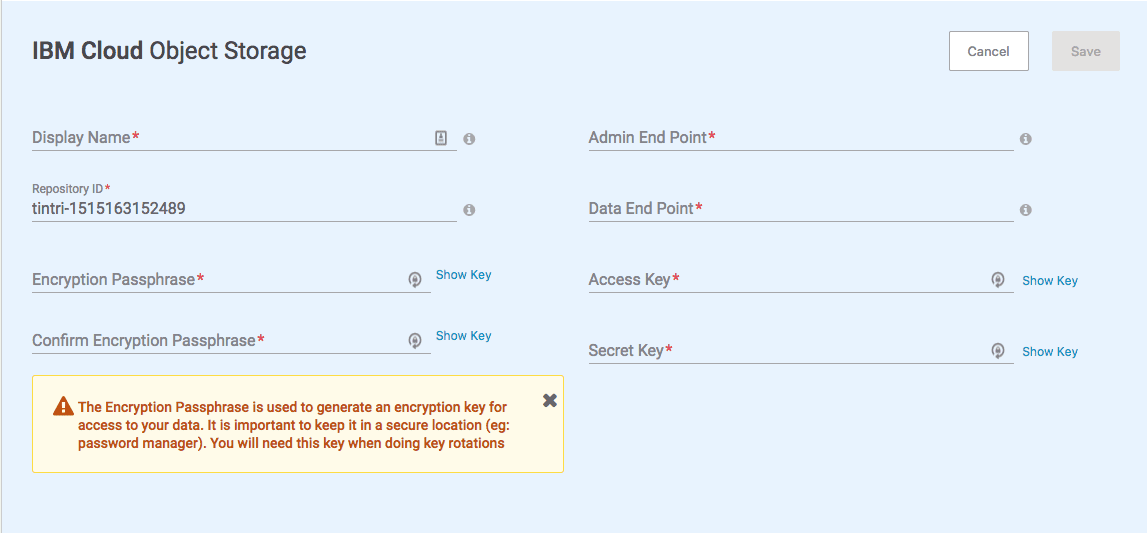

Most of these have been requested by one or more customers, so I decided to stand up as many of them as possible. As of today I have almost all of them running with successful replication from Tintri VMstores, and I have also tested download restores. So what’s the trick to making all of these work? It’s rather simple if you want to try it. In a past blog post I showed how to configure Tintri Cloud Connector for AWS S3; to set up these other targets, you simply choose the IBM COS option instead.

For each solution the specifics, such as the endpoint and port, will vary, as will the keys of course. For reference, here are the endpoint formats I have tested for each vendor:

- (Minio) http://<ip_Address or hostname>:9000

- (Scality S3) http://<ip_Address or hostname>:8000

- (Cloudian) http://<MUST BE FQDN>

- (Dell EMC ECS) http://<ip_Address or hostname>:9020

- (Ceph) http://<ip_Address or hostname>:7480

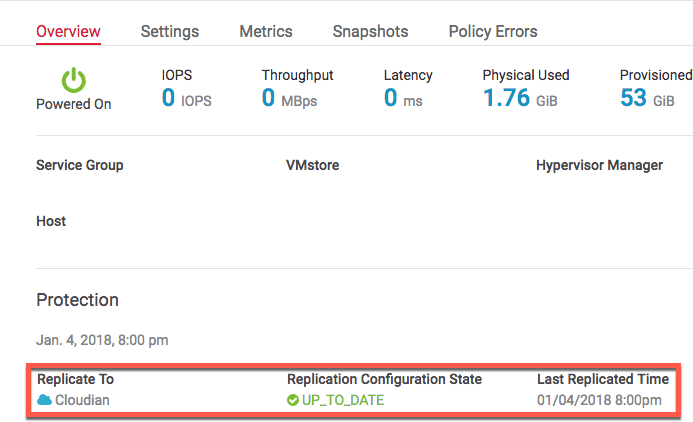
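The endpoint formats listed above can be captured in a small Python helper. This is a minimal sketch, not part of Tintri Cloud Connector: the function names are hypothetical, the ports are simply the defaults from my tests (your deployment may use different ports or HTTPS), and all it does is build the string you would enter in the IBM COS option's Admin and Data endpoint fields, which in my tests were given the same value.

```python
import ipaddress

# Hypothetical lookup table: the ports used in the tests in this post.
# Your deployment may listen on different ports or enable HTTPS.
DEFAULT_PORTS = {
    "minio": 9000,
    "scality": 8000,
    "ecs": 9020,      # Dell EMC ECS
    "ceph": 7480,
    "cloudian": None,  # no port listed above; Cloudian must be addressed by FQDN
}


def is_ip(host):
    """Return True if host is a literal IPv4/IPv6 address."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def build_endpoint(vendor, host, port=None, https=False):
    """Build an http(s)://<host>[:<port>] endpoint string (hypothetical helper)."""
    vendor = vendor.lower()
    if vendor not in DEFAULT_PORTS:
        raise ValueError(f"unknown vendor: {vendor}")
    # Cloudian needs extra DNS setup and a fully qualified domain name.
    if vendor == "cloudian" and (is_ip(host) or "." not in host):
        raise ValueError("Cloudian requires a fully qualified domain name")
    scheme = "https" if https else "http"
    if port is None:
        port = DEFAULT_PORTS[vendor]
    return f"{scheme}://{host}" if port is None else f"{scheme}://{host}:{port}"


def endpoint_pair(vendor, host, **kwargs):
    """Use the same value for the Admin and Data endpoints, as in these tests."""
    url = build_endpoint(vendor, host, **kwargs)
    return {"admin_endpoint": url, "data_endpoint": url}
```

For example, `build_endpoint("minio", "10.0.0.5")` yields `http://10.0.0.5:9000`, while passing an IP address for Cloudian raises an error because it needs an FQDN.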

You can use either the IP address or the hostname for all of these except Cloudian, which specifically requires some additional DNS configuration and an FQDN. Some of these targets also offer HTTPS, but the takeaway is that for all of them the format must be http(s)://<ip_Address or hostname>:<Port> in both the Admin Endpoint and Data Endpoint fields. In all my test cases the same value was used for both, and it works fine. From there you configure replication the same way I showed in the previous article and point it at the new on-premises S3 target. Below you can see an example of a machine replicating to Cloudian.

You can also replicate to more than one cloud destination; however, it is important to point out that the current maximum number of cloud destinations is four. If you are running Tintri Global Center 3.7 and Tintri OS 4.4, you have access to test out Tintri Cloud Connector. If you also have an on-premises S3 target, whether it is one of the ones I have tested or not, you can give this a try. There are multiple use cases for which customers want to use Cloud Connector in this way, and having access to more on-premises S3 target options is very useful.
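The two constraints mentioned above (the software versions and the four-destination limit) lend themselves to a quick pre-flight check. This is a hypothetical sketch, not a Tintri tool: `preflight` and its arguments are made-up names, and treating TGC 3.7 and Tintri OS 4.4 as *minimum* versions is my assumption.

```python
# Limits taken from this post; treating the versions as minimums is an assumption.
MAX_CLOUD_DESTINATIONS = 4
MIN_TGC_VERSION = (3, 7)
MIN_TINTRI_OS_VERSION = (4, 4)


def parse_version(version):
    """Turn '4.4.1' into (4, 4); only major.minor is compared."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def preflight(tgc_version, tintri_os_version, destinations):
    """Return a list of problems with a hypothetical replication plan (empty if OK)."""
    problems = []
    if parse_version(tgc_version) < MIN_TGC_VERSION:
        problems.append(f"Tintri Global Center {tgc_version} is older than 3.7")
    if parse_version(tintri_os_version) < MIN_TINTRI_OS_VERSION:
        problems.append(f"Tintri OS {tintri_os_version} is older than 4.4")
    if len(destinations) > MAX_CLOUD_DESTINATIONS:
        problems.append(
            f"{len(destinations)} cloud destinations exceeds the limit of "
            f"{MAX_CLOUD_DESTINATIONS}"
        )
    return problems
```

An empty list means the plan fits within the limits described here; anything else is a human-readable reason it does not.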

Once more testing is done, I hope to get official support for most, if not all, of these other 100% S3 API compliant targets. It’s actually pretty cool to get some of these stood up for various other reasons, too. Oh, and if you are a Mac user, Cyberduck is a lifesaver and worth the money in the Mac App Store!

Chris Colotti's Blog Thoughts and Theories About…

Hi Chris, thank you. This is truly AWESOME stuff! TGC service group replication is working perfectly. Do you know if I can extend the S3 blob storage from within Minio, or in my case FreeNAS with the Minio service activated, where all FreeNAS disks are “local” on VMDK? Let’s say I have a 10TB S3 disk (as a VMDK) and need to extend it with an additional 10TB. I extend the VMDK to 20TB and run the gpart command in the shell so that FreeNAS recognizes the new disk space; so far so good. But how can I make the S3 volume bigger so that the Minio S3 service also sees the new disk space? So far I have not been able to figure this one out… Any help or hint would be greatly appreciated.

That’s a really good question. I’m not sure how Minio, or FreeNAS with Minio, can expand the disks. I’ve only used Minio as a Docker container with a fixed disk for testing. I’d suggest posting on the FreeNAS forums. I suspect Minio on FreeNAS is just accessing the base disk storage rather than a separately carved-out amount of disk, so you would probably have to expand the primary storage and Minio would simply access it. Again, I’m speculating, but I’m sure the FreeNAS forums could be of some help.

Glad it’s working! It’s a great solution to use with Tintri Cloud Connector for sure.

Looks like I was on the right track. I did some digging in my lab, and Minio, like the other services (NFS, SMB, etc.), works off the base disk and the /mnt folder.

I was able to find this thread on expanding the disks: https://forums.freenas.org/index.php?threads/expand-resize-single-disk.1660/

Otherwise, you have to remove the disk and add a new one, or add a new disk, migrate the data, and move the Minio directory location.

Thanks Chris. Yes, that was the post I followed to extend the ZFS drive with gpart, but it does not extend the S3 capacity; it is still the original size. Since this procedure seems to be unsupported, I will instead set up four FreeNAS VMs, each with 25TB thin-provisioned VMDKs from the start. Once those “blobs” run out of free space, I will spin up and scale out to another FreeNAS VM S3 blob, so that I never have to expand the local S3 (VMDK) disk and risk an xxTB corrupt filesystem. 🙂 I’m looking forward to offloading long-term snapshots from our T850 Hybrids, since they take heavy punishment from replicated VMs with LOTS of change over 30 days, i.e. SQL servers and other disk-intensive app servers. The AFA boxes have dedup, so they handle these kinds of VMs much better. But still, long-term storage of synthetic VMs on Tintri is a waste of precious high-performance disk. This is where our Netcrap boxes can finally come to good use, as on-prem S3 long-term storage. ^^

Interesting. Minio must lock itself to the original mount size. I know that with the Docker version it’s fixed, but I have never tried the native (non-Docker) version, which is most likely what FreeNAS is using. Someone on the Minio forums might know, or you could open an issue on their GitHub page just to see if it’s possible.

Hi,

And thanks, Chris. Today I can confirm it works fine with NetApp StorageGRID Webscale as an S3 endpoint. It is important to use a real, trusted, vendor CA-issued SSL certificate: a self-signed certificate, or one issued by an internal/private CA, will not work when connecting from Tintri to NetApp SGW. In NetApp SGW you need to import three files to get it right: the certificate file, the key file, and the PEM file.

- (NetApp SGW) https://<ip_Address or hostname>:8082

//Thomas Spindler, Business Development Manager

Solid Park in Sweden

Awesome! Yes, I ran into the SSL issue in local testing as well, but most of my “on-prem” tests were non-SSL, which always works. So you are aware, I have opened a bug on this to see if we can get it addressed in a future update.
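Given the certificate behaviour reported above, it can help to check whether an HTTPS S3 endpoint presents a certificate chain that verifies against a standard CA store before pointing Tintri at it. This is a minimal sketch using only Python’s standard library; the function names are hypothetical, and it checks against the trust store of the machine running the script, which is an assumption and not necessarily what the VMstore itself trusts.

```python
import socket
import ssl
from urllib.parse import urlsplit


def split_endpoint(endpoint):
    """Split an http(s)://host[:port] endpoint into (scheme, host, port)."""
    parts = urlsplit(endpoint)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        raise ValueError(f"not an http(s) endpoint: {endpoint}")
    port = parts.port or (443 if parts.scheme == "https" else 80)
    return parts.scheme, parts.hostname, port


def cert_is_trusted(endpoint, timeout=5.0):
    """Return True if the endpoint's certificate verifies against the local CA store.

    Returns False on a verification failure; other network errors are raised.
    """
    scheme, host, port = split_endpoint(endpoint)
    if scheme != "https":
        raise ValueError("certificate checks only apply to https:// endpoints")
    context = ssl.create_default_context()  # verifies the chain and the hostname
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host):
                return True  # handshake succeeded, so the chain verified
    except ssl.SSLCertVerificationError:
        # e.g. self-signed, or signed by a CA this machine does not trust
        return False
```

A `False` result for something like `cert_is_trusted("https://sgw.example.com:8082")` (a hypothetical hostname) would be consistent with the self-signed or private-CA failures described in this thread.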